The ultimate goal of the ENCS Humanoid Robotics Project is to develop a robot that the average person cannot distinguish from another person. Our human-like robot should be able to interact with a person through a natural language interface that implements voice generation and recognition. It should recognize who it is conversing with, remember previous conversations, and use that information in the current conversation. The intent is to teach the robot how to learn, not how to respond.

Meet KEN.

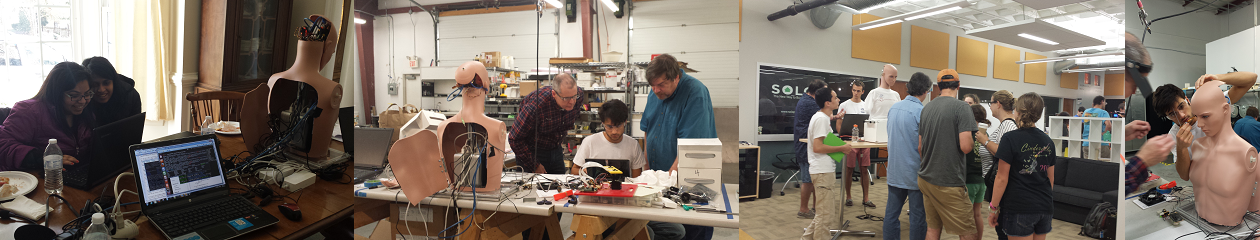

KEN is the first-generation robot the team is working on. Made from a mannequin upper body and head, KEN is human-like in appearance. He has the ability to see, hear, speak, and move his neck. He detects faces and learns to recognize the people he meets. He can carry on a conversation with you.

KEN is a platform for robotic engineering discovery and development. With this robot, we are engaging IEEE members in a collaborative effort to develop professional skills and engage students and our community in science, technology, engineering, and mathematics (STEM).

Overview

Everyone involved in this project understands that building a humanoid robot is hard. Really hard. But that is, in effect, what makes it both fun and worthwhile. We set a big goal, and then we work at it step by step, doing what we can to move ourselves closer to it. By doing this, not only do we learn more about how to build a robot, but by attempting to mimic human abilities, we learn more about what it means to be human. That is the beauty of this project.

KEN is just the start. The initial focus is on building a human-looking robot that can participate in natural human interactions. To do this, we needed to give KEN the ability to see, hear, and speak. Tiny cameras mounted in his eyes give him the potential (not implemented yet) for binocular vision and depth perception. So far, vision is the most human-like ability KEN has. Of course, he has tunnel vision and fairly low resolution, but it's a start. His hearing is provided by a conference room microphone, and he speaks through a speaker. These components are less human-like than his eyes, but they serve the purpose for now and allow us to concentrate on the integration aspects of the system.

KEN uses voice activity detection and speech recognition to record spoken phrases and translate them to text. The artificial intelligence system processes the text into a response, which is spoken back using a text-to-speech synthesizer.
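To make the flow from speech to response concrete, here is a minimal sketch in Python. It is not KEN's actual code: the energy-threshold approach to voice activity detection, the function names, and the `frame_size`, `threshold`, and `hangover` values are all assumptions chosen for illustration. The recognizer, the AI system, and the synthesizer are passed in as hypothetical callables (`recognize`, `respond`, `speak`), since the real components are not described here.

```python
import math


def frame_rms(frame):
    """Root-mean-square energy of one frame of audio samples."""
    if not frame:
        return 0.0
    return math.sqrt(sum(s * s for s in frame) / len(frame))


def detect_speech_segments(samples, frame_size=160, threshold=0.1, hangover=2):
    """Return (start, end) sample-index pairs for runs of frames judged to be speech.

    A frame counts as speech when its RMS energy reaches `threshold`.
    A segment closes once more than `hangover` quiet frames follow it.
    All parameter values are illustrative, not tuned for any real microphone.
    """
    segments = []
    start = None  # start index of the segment in progress, if any
    end = None    # end index (exclusive) of the last voiced frame
    quiet = 0     # consecutive quiet frames seen inside the segment
    for i in range(0, len(samples), frame_size):
        frame = samples[i:i + frame_size]
        if frame_rms(frame) >= threshold:
            if start is None:
                start = i
            end = i + len(frame)
            quiet = 0
        elif start is not None:
            quiet += 1
            if quiet > hangover:
                segments.append((start, end))
                start = None
                quiet = 0
    if start is not None:
        segments.append((start, end))
    return segments


def handle_audio(samples, recognize, respond, speak):
    """Pass each detected phrase through recognition, response, and speech output.

    `recognize`, `respond`, and `speak` are hypothetical stand-ins for the
    speech recognizer, the AI system, and the text-to-speech synthesizer.
    """
    replies = []
    for start, end in detect_speech_segments(samples):
        text = recognize(samples[start:end])
        reply = respond(text)
        speak(reply)
        replies.append(reply)
    return replies
```

With stub callables, `handle_audio(samples, lambda seg: "hello", str.upper, print)` would print one reply per detected phrase. Keeping the three stages as interchangeable callables reflects the integration focus described above: any one component can be swapped without touching the others.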

KEN is constantly searching the video image frames from his eye cameras for human faces. He moves his head to center a face in his gaze. In the background, he records information about the faces he sees to allow him to recognize the face again and to associate the face with a name.
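One simple way to center a face in the gaze is a proportional correction: measure how far the face is from the middle of the frame and turn the head by a fraction of that offset. The sketch below is an assumption about how this could work, not a description of KEN's controller. The function name `centering_step`, the pan and tilt axes, the sign conventions, and the `gain_deg` and `deadband` values are all hypothetical.

```python
def centering_step(face_box, frame_size, gain_deg=10.0, deadband=0.05):
    """Return (pan, tilt) adjustments in degrees that move a face toward frame center.

    face_box   -- (x, y, width, height) of the detected face, in pixels
    frame_size -- (width, height) of the camera frame, in pixels
    gain_deg   -- degrees of correction per unit of normalized error (illustrative)
    deadband   -- normalized error below which no motion is commanded (illustrative)

    Assumed conventions: positive pan turns toward the right of the image,
    positive tilt turns upward, and image y grows downward.
    """
    x, y, w, h = face_box
    frame_w, frame_h = frame_size

    # Offset of the face center from the frame center, scaled to [-1, 1].
    err_x = ((x + w / 2) - frame_w / 2) / (frame_w / 2)
    err_y = ((y + h / 2) - frame_h / 2) / (frame_h / 2)

    # Ignore small errors so the head does not jitter around the target.
    pan = gain_deg * err_x if abs(err_x) > deadband else 0.0
    tilt = -gain_deg * err_y if abs(err_y) > deadband else 0.0
    return pan, tilt
```

Calling this once per video frame and adding the result to the current neck angles would nudge the head toward the face a little at a time. The deadband is a design choice to keep the head still once the face is close enough to center.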